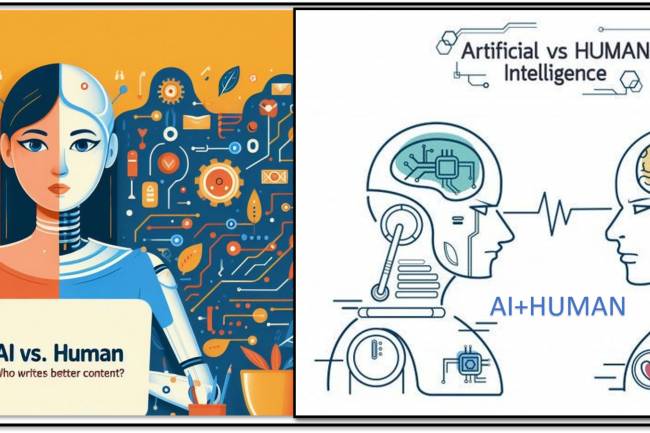

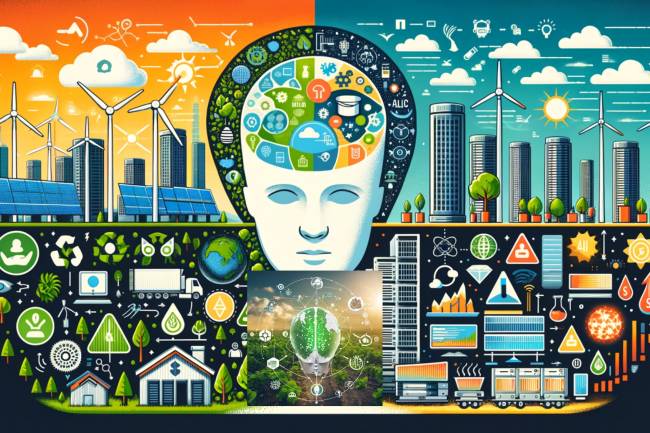

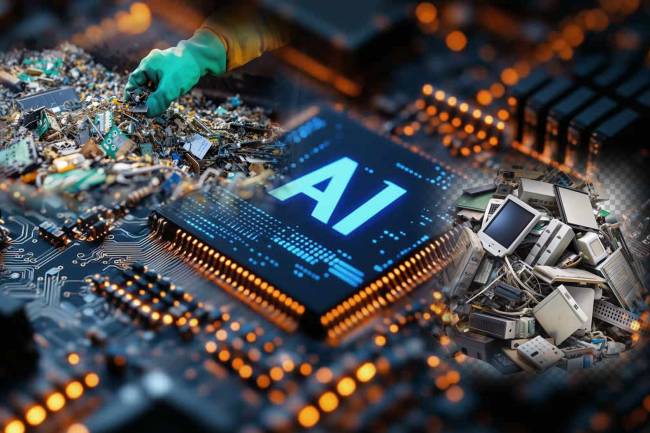

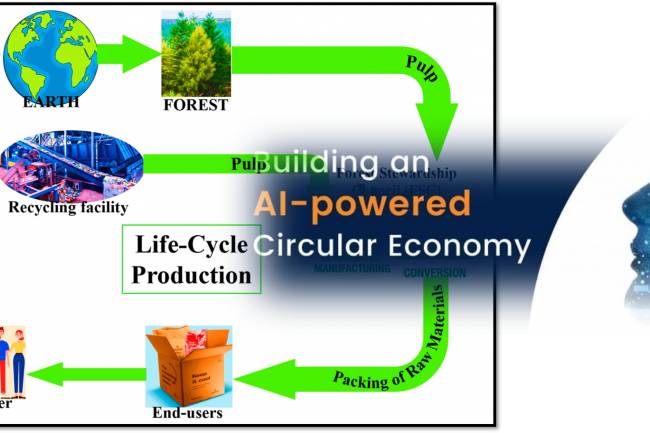

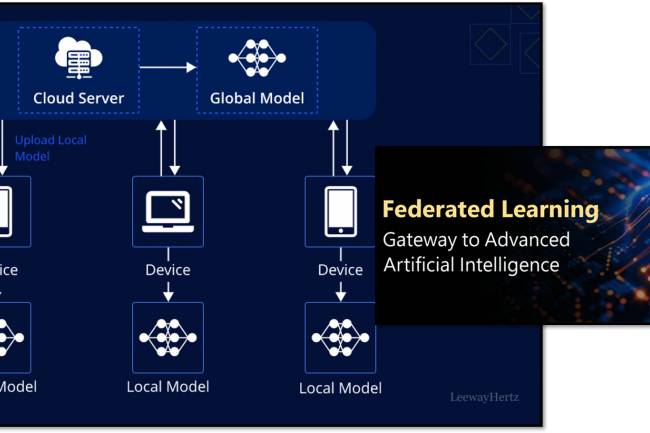

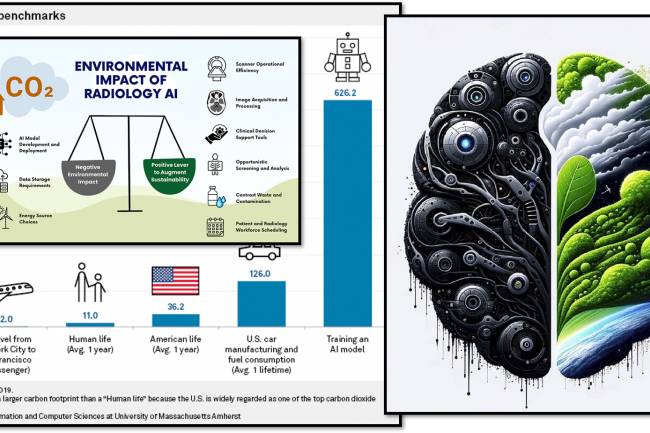

Sustainable AI means developing and using artificial intelligence in a way that minimizes its negative environmental, social and ethical impacts and maximizes its positive contributions to a sustainable future.

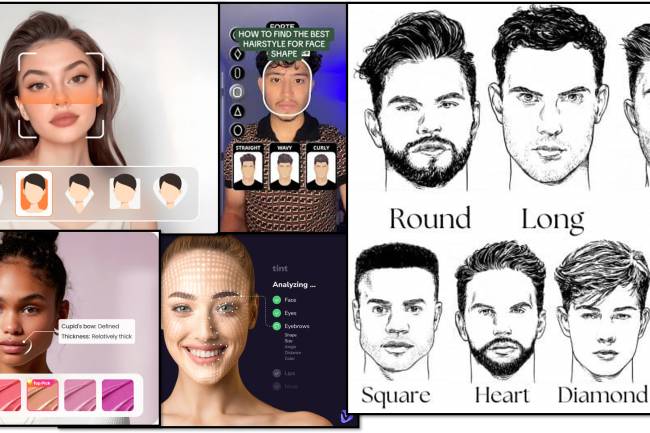

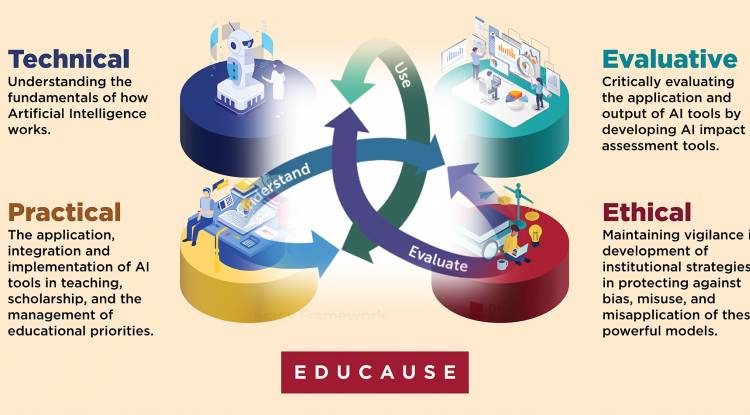

AI literacy is the ability to understand, use, and critically evaluate artificial intelligence systems, including their benefits, risks, and ethical implications.

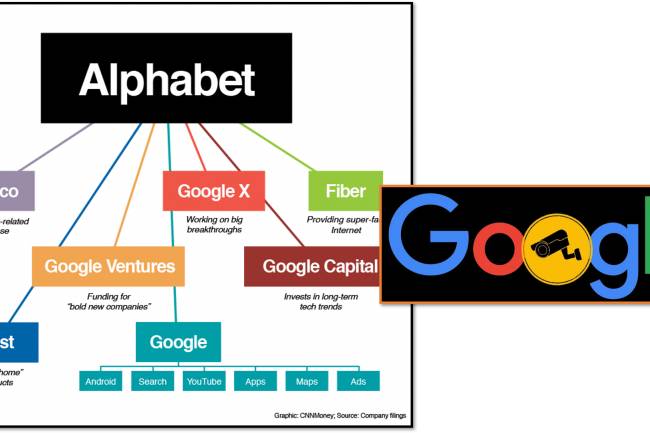

Diverse stakeholders (governments, businesses, civil society, etc.) must collaborate in the development and implementation of AI to address ethical concerns, build trust, foster innovation, and ensure that AI benefits all of humanity.

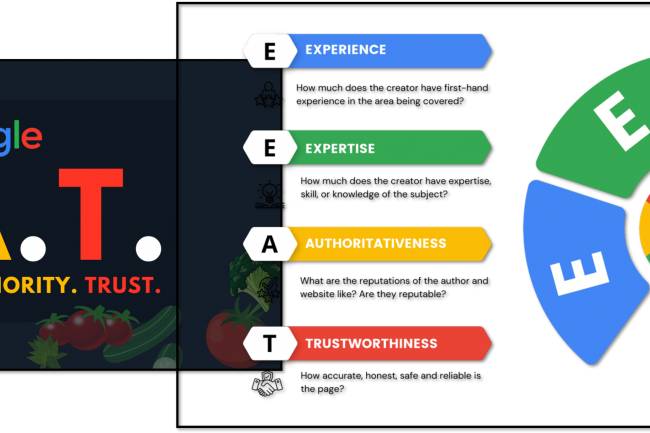

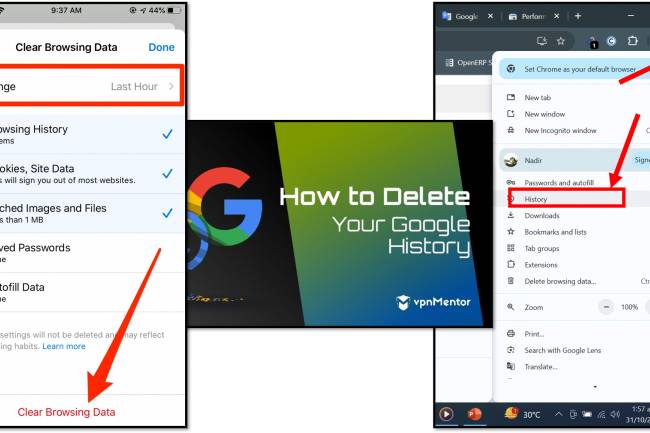

AI regulation and ethics: Laws and policies aim to ensure that AI is fair, transparent, and safe. Key areas include data privacy, bias prevention, accountability, and the ethical use of AI. Governments and organizations develop guidelines to regulate AI responsibly.

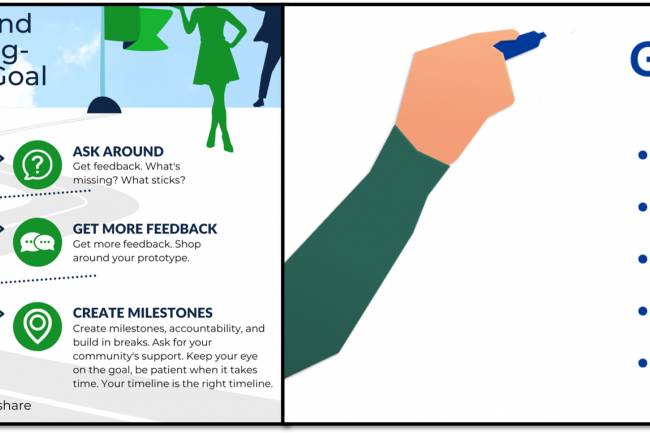

Public awareness and education about AI are essential to ensure that people understand its benefits, risks, and ethical implications. Key aspects include:

- AI literacy programs: Schools, universities, and online platforms offer courses that teach students and professionals the basics of AI.
- Media and outreach: Governments and organizations use social media, news, and public campaigns to inform people about AI developments.

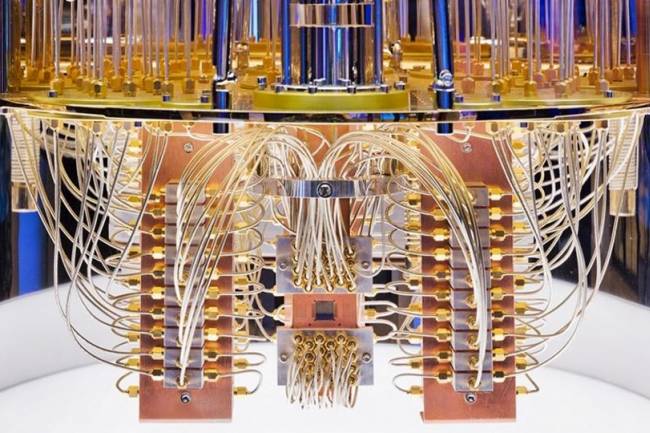

Minds were ignited in labs around the world. Algorithms were unlocked, code was sung. AI research organizations, hubs of innovation, gave birth to the future, one line of code at a time.